Abstract:

Architectural Image Captioning is a deep learning-based system designed to automatically generate meaningful textual descriptions for images of buildings and architectural structures. This project integrates Convolutional Neural Networks (CNNs) and Long Short-Term Memory (LSTM) networks to bridge the gap between computer vision and natural language processing. The visual features of images are extracted using a pre-trained InceptionV3 model, which converts each image into a 2048-dimensional feature vector. These extracted features are then passed to an LSTM network trained on a custom dataset of architectural images and corresponding captions. Each caption is preprocessed by converting to lowercase, removing punctuation, and adding <start> and <end> tokens to define sentence boundaries.

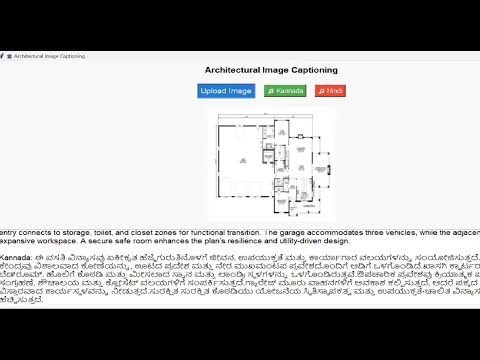

To enhance accessibility, the system incorporates multilingual Text-to-Speech (TTS) functionality using Google Translator and gTTS, enabling the generated captions to be audibly played in English, Kannada, and Hindi. A Tkinter-based GUI provides an interactive interface for users to upload images, view generated captions, and listen to them in their preferred language. Experimental results show that the system produces accurate and context-aware captions, contributing to architectural education, visual accessibility, and automated documentation. This project demonstrates the potential of combining deep learning with natural language processing for real-world multimedia applications.

Keywords:

Image Captioning, Convolutional Neural Network (CNN), Long Short-Term Memory (LSTM), InceptionV3, Deep Learning, Text-to-Speech (TTS), Multilingual Translation, Tkinter GUI, Architecture Recognition, Artificial Intelligence.

Introduction:

In recent years, the integration of computer vision and natural language processing (NLP) has revolutionized the way machines perceive and describe visual information. Among the most fascinating applications of this intersection is image captioning—the automatic generation of descriptive textual sentences from images. While general image captioning systems have gained significant attention, domain-specific captioning, such as architectural image captioning, remains a relatively underexplored yet highly impactful area. The goal of this project is to design and implement an intelligent system that not only generates meaningful captions for architectural images but also provides multilingual audio descriptions, making it accessible to a wider audience, including those with visual impairments or language barriers.

Architecture, being one of the most visually rich and culturally significant domains, presents unique challenges for image captioning systems. Unlike general object recognition, where models identify simple entities such as “dog,” “car,” or “tree,” architectural images involve complex visual features such as geometric patterns, structural designs, textures, materials, and styles that define specific architectural categories. For instance, distinguishing between “Gothic cathedral,” “modern glass skyscraper,” and “ancient stone temple” requires not only visual understanding but also contextual knowledge of architectural styles. Hence, an architecture-aware captioning system must combine deep visual understanding with linguistic fluency to produce accurate and contextually relevant descriptions.

To achieve this, the proposed system employs a hybrid deep learning model combining Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), specifically the Long Short-Term Memory (LSTM) architecture. CNNs, known for their exceptional ability to extract high-level spatial features from images, serve as the visual encoder, transforming images into compact numerical feature vectors that represent the architectural characteristics. These encoded features are then passed to the LSTM decoder, which sequentially generates words to form a grammatically coherent sentence. This encoder–decoder structure has become a foundational framework for modern image captioning systems, allowing the model to translate complex visual patterns into meaningful language.

The InceptionV3 network, pre-trained on the ImageNet dataset, is utilized as the CNN backbone in this project. This model is renowned for its deep architecture and efficient feature extraction capabilities, making it suitable for representing intricate visual structures such as those found in architectural imagery. By removing the final classification layer of InceptionV3, the system captures the deep visual embeddings of each image and feeds them into the LSTM network. The LSTM model is then trained on a specialized dataset containing architectural images paired with descriptive captions. The dataset undergoes preprocessing steps, including text normalization, punctuation removal, tokenization, and sequence padding, ensuring smooth learning and consistent caption generation.

A key innovation of this project is the integration of multilingual Text-to-Speech (TTS) functionality. While most captioning systems are limited to textual outputs, this system enhances accessibility by converting the generated captions into spoken language using Google Text-to-Speech (gTTS) and Google Translator APIs. The system supports English, Hindi, and Kannada, enabling users from diverse linguistic backgrounds to interact with the model effectively. This multilingual capability not only increases usability in multilingual regions like India but also enhances inclusivity for visually challenged users who rely on auditory descriptions.

The system is implemented with a Tkinter-based Graphical User Interface (GUI) that simplifies user interaction. Through this interface, users can upload architectural images, view the generated captions instantly, and choose the desired language for audio playback. The GUI ensures that even non-technical users can experience the power of deep learning–based image captioning with minimal effort. Moreover, the design emphasizes simplicity, real-time response, and user engagement, aligning with the project’s objective of creating a practical and interactive application.

From a broader perspective, the Architectural Image Captioning System demonstrates how artificial intelligence can contribute to multiple domains beyond traditional computer vision. In education, it can assist architecture students in analyzing design patterns and understanding stylistic elements of different structures. In heritage conservation, it can be used to automatically label and document historical monuments and heritage sites. For visually impaired users, it serves as an assistive tool, converting visual data into speech, thereby bridging the gap between visual content and accessibility. In real estate and urban planning, it can help automate the classification and tagging of building images for cataloging and retrieval purposes.

The proposed system also reflects the growing importance of multimodal learning—a paradigm that integrates multiple forms of data such as vision, text, and speech. By combining these modalities, the project showcases how machines can develop a holistic understanding of their environment, moving closer to human-like perception. The transition from image pixels to structured sentences and then to spoken language represents an advanced cognitive pipeline that aligns with current trends in artificial intelligence research.

Despite the advancements, building an accurate and context-aware architectural captioning model poses several challenges. The diversity of architectural forms, variability in lighting and angles, and limited availability of domain-specific caption datasets make the training process complex. Furthermore, generating natural-sounding multilingual audio output involves accurate translation and pronunciation, especially for languages like Kannada and Hindi, which have rich phonetic structures. Addressing these challenges required careful data preprocessing, model fine-tuning, and integration of robust translation and speech-generation modules.

The implementation of this system emphasizes transfer learning, sequence modeling, and language translation as key pillars. Transfer learning enables the reuse of pre-trained deep models like InceptionV3 to save computational resources and training time while maintaining high accuracy. Sequence modeling with LSTM ensures that the generated captions maintain grammatical structure and semantic continuity. Finally, the integration of Google Translator and gTTS provides an effective way to extend text outputs into multiple languages and speech formats.

In conclusion, this project presents a comprehensive framework that merges deep learning, natural language processing, and speech synthesis into a single, user-friendly application. The Architectural Image Captioning System not only demonstrates the technological power of AI in interpreting and describing visual data but also emphasizes inclusivity and accessibility. The multilingual feature broadens its usability across linguistic communities, while the deep learning backbone ensures robust performance and adaptability. As the field of AI continues to evolve, such systems will play a critical role in transforming how humans and machines perceive and communicate about the visual world.

• Demo Video

• Complete project

• Full project report

• Source code

• Complete project support by online

• Life time access

• Execution Guidelines

• Immediate (Download)

Software Requirements:

1. Python 3.7 and Above

2. NumPy

3. OpenCV

4. Scikit-learn

5. TensorFlow

6. Keras

Hardware Requirements:

1. PC or Laptop

2. 500GB HDD with 1 GB above RAM

3. Keyboard and mouse

4. Basic Graphis card

1. Immediate Download Online