ABSTRACT:

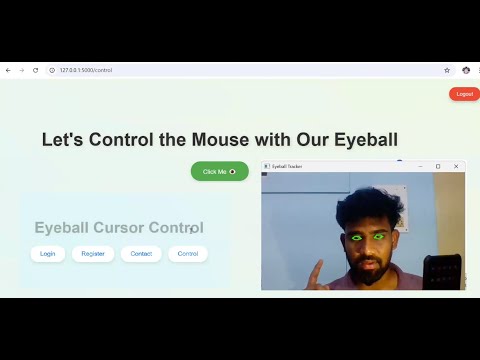

Traditional input devices such as keyboards and mice present accessibility challenges for individuals with motor impairments. This project introduces a hands-free cursor control system based on eyeball movement detection, built with Raspberry Pi, OpenCV, and dlib's facial landmark detection. The system tracks eye movements in real time and translates them into cursor movements, allowing a computer to be controlled without physical input devices.

The core functionality relies on a webcam-based eye-tracking system that captures facial landmarks, focusing specifically on the eyes. Using dlib’s pre-trained shape predictor, the system detects key points around the eyes. From these points it calculates the Eye Aspect Ratio (EAR) to detect blinks, and it tracks the eye position to estimate gaze direction. Smooth cursor movement is achieved by mapping eye positions to screen coordinates with an adaptive filtering technique, which reduces noise and improves accuracy. Blink-based interactions provide left and right clicks, double clicks, and scrolling, so no external peripherals are needed.
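The adaptive filtering technique is not specified in detail here. As one possible reading, the sketch below maps an eye position to screen coordinates and then applies exponential smoothing whose weight grows with the size of the jump, so small jitter is damped while large, deliberate movements respond quickly. The names `AdaptiveSmoother` and `eye_to_screen`, the calibration box, and all parameter values are illustrative assumptions, not taken from the project's source code.

```python
import math


def eye_to_screen(ex, ey, eye_box, screen_size):
    """Linearly map an eye-centre position inside eye_box to screen pixels.

    eye_box is a hypothetical calibration rectangle (left, top, right, bottom)
    in camera-frame pixels; screen_size is (width, height).
    """
    left, top, right, bottom = eye_box
    width, height = screen_size
    # Normalise to [0, 1] and clamp so the cursor never leaves the screen.
    nx = min(max((ex - left) / (right - left), 0.0), 1.0)
    ny = min(max((ey - top) / (bottom - top), 0.0), 1.0)
    return nx * (width - 1), ny * (height - 1)


class AdaptiveSmoother:
    """Exponential smoothing with a weight that depends on the jump size."""

    def __init__(self, min_alpha=0.1, max_alpha=0.6, full_speed_px=200.0):
        self.min_alpha = min_alpha          # weight used for tiny movements
        self.max_alpha = max_alpha          # weight used for large movements
        self.full_speed_px = full_speed_px  # jump size that reaches max_alpha
        self.pos = None                     # last smoothed position

    def update(self, x, y):
        # The first sample initialises the filter.
        if self.pos is None:
            self.pos = (float(x), float(y))
            return self.pos
        px, py = self.pos
        jump = math.hypot(x - px, y - py)
        # Interpolate the weight between min_alpha and max_alpha.
        t = min(jump / self.full_speed_px, 1.0)
        alpha = self.min_alpha + t * (self.max_alpha - self.min_alpha)
        self.pos = (px + alpha * (x - px), py + alpha * (y - py))
        return self.pos
```

In a full pipeline, the smoothed position would be passed to whatever cursor API the system uses on each processed frame.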

The implementation reduces processing load by lowering the frame processing rate and using efficient eye landmark extraction techniques. A dynamic scrolling mechanism adjusts scroll speed based on gaze position, allowing for an intuitive browsing experience. The system is designed for deployment on a Raspberry Pi, making it cost-effective, compact, and accessible.
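To illustrate how scroll speed could depend on gaze position, here is a minimal sketch. It assumes a dead zone around the vertical centre of the frame and a speed that grows linearly toward the top and bottom edges. The function name `scroll_speed` and the `dead_zone` and `max_step` values are hypothetical and not taken from the project.

```python
def scroll_speed(gaze_y, frame_height, dead_zone=0.2, max_step=10):
    """Return a signed scroll step: positive scrolls up, negative scrolls down."""
    # Normalise to [-1, 1], with 0 at the vertical centre of the frame.
    half = frame_height / 2
    offset = (gaze_y - half) / half
    offset = max(-1.0, min(1.0, offset))
    # No scrolling while the gaze stays near the centre.
    if abs(offset) <= dead_zone:
        return 0
    # Rescale the part outside the dead zone to (0, 1].
    strength = (abs(offset) - dead_zone) / (1.0 - dead_zone)
    step = max(1, round(strength * max_step))
    # Image y grows downward, so a gaze below centre scrolls down (negative).
    return -step if offset > 0 else step
```

The sign convention matches libraries such as PyAutoGUI, where `pyautogui.scroll` treats positive values as scrolling up. Whether the project uses that library is an assumption.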

This project contributes to assistive technology, offering an effective solution for individuals with mobility impairments. Future improvements could integrate deep learning-based gaze estimation for enhanced precision and robustness across different lighting conditions and facial variations. The proposed system has the potential to redefine human-computer interaction by enabling hands-free, gaze-controlled navigation.

INTRODUCTION:

Human-computer interaction has traditionally relied on input devices such as keyboards, mice, and touchscreens. While these methods are effective for most users, they pose significant challenges for individuals with physical disabilities, such as motor impairments, paralysis, or conditions like amyotrophic lateral sclerosis (ALS). For such individuals, alternative control methods are essential to ensure accessibility and usability. Eye-tracking technology has emerged as a promising approach to hands-free interaction with computers. However, commercial eye-tracking systems are often expensive, require specialized hardware, and may not be accessible to all users.

This project introduces an eyeball movement-based cursor control system built on Raspberry Pi and OpenCV. Using computer vision and machine learning techniques, the system captures eye movements in real time and translates them into cursor actions. Because tracking is webcam-based, no dedicated eye-tracking hardware is required, which makes the system a cost-effective and accessible alternative. The key objective is to provide a seamless and intuitive hands-free computing experience, particularly for individuals with mobility impairments.

The system operates by detecting facial landmarks using dlib’s 68-point facial landmark detector and tracking eye movements to control the cursor. It calculates the Eye Aspect Ratio (EAR) to measure eye openness and identify blinks, which are then mapped to mouse actions such as left-clicking, right-clicking, and scrolling. Cursor movement is achieved by mapping eye positions to screen coordinates with an adaptive smoothing technique to enhance accuracy and reduce noise. Additionally, the system incorporates a dynamic scrolling mechanism based on gaze position, allowing users to navigate through web pages and documents effortlessly.
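The EAR calculation described above can be sketched as follows. The formula is the commonly used one: the sum of the two vertical eyelid distances divided by twice the horizontal eye width, computed from six landmarks per eye. The blink threshold of 0.2, the minimum of three closed frames, and the `BlinkDetector` class are illustrative assumptions rather than values confirmed by the project.

```python
import math


def _dist(a, b):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def eye_aspect_ratio(eye):
    """eye: six (x, y) landmarks ordered p1..p6 around the eye contour."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = _dist(p2, p6) + _dist(p3, p5)  # two eyelid-to-eyelid distances
    horizontal = _dist(p1, p4)                # corner-to-corner eye width
    return vertical / (2.0 * horizontal)


class BlinkDetector:
    """Reports a blink when the eye reopens after enough closed frames."""

    def __init__(self, threshold=0.2, min_frames=3):
        self.threshold = threshold    # EAR below this counts as closed (assumed)
        self.min_frames = min_frames  # closed frames needed for a blink (assumed)
        self.closed_frames = 0

    def update(self, ear):
        """Return True once, on the frame the eye reopens after a blink."""
        if ear < self.threshold:
            self.closed_frames += 1
            return False
        blinked = self.closed_frames >= self.min_frames
        self.closed_frames = 0
        return blinked
```

With dlib's 68-point model, the two eyes correspond to landmark indices 36 to 41 and 42 to 47. Running one detector per eye is one way to distinguish a left-eye blink from a right-eye blink before mapping each to a mouse action.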

Designed to run efficiently on a Raspberry Pi, the system is optimized for real-time performance while ensuring low power consumption. By eliminating the need for physical input devices, this technology enhances accessibility and expands the possibilities of human-computer interaction. The project has broad applications, including assistive technology for individuals with disabilities, hands-free computing for professionals in hands-busy environments, gaming, virtual reality, and smart home automation.

• Demo Video

• Complete project

• Full project report

• Source code

• Complete online project support

• Lifetime access

• Execution guidelines

• Immediate download

Software Requirements:

1. Python 3.7 or above

2. NumPy

3. OpenCV

4. Scikit-learn

5. TensorFlow

6. Keras

Hardware Requirements:

1. PC or Laptop

2. 500 GB HDD and at least 1 GB of RAM

3. Keyboard and mouse

4. Basic graphics card
